Accomable is a website that allows disabled travellers to find and book accessible accommodation. [Edit: Accomable were acquired by Airbnb in Nov 2017.] They were interested in assessing how they measured up against market-standard metrics, and in understanding what drives the number of bookings, a key driver of growth in their business.

Metrics

Accomable was interested in tracking metrics recommended by a VC firm for marketplaces. The metrics look at buyers, sellers and the overall marketplace to understand how it's growing and what could fuel further growth. I wrote Stata code which enabled Accomable to select the months of interest and which populated a spreadsheet of the metrics, broken down by month, for the months selected. This involved looping over the selected months for each calculation and using “putexcel” to refresh the spreadsheet.
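The original Stata code isn't reproduced here, but the loop-over-selected-months pattern can be sketched in Python. This is a minimal illustration only: the record layout, the function name `monthly_metrics` and the three metrics shown are assumptions, not the metrics Accomable actually tracked, and the spreadsheet step (done with “putexcel” in Stata) is left out.

```python
from collections import defaultdict

# Hypothetical booking records: (booking month "YYYY-MM", traveller id, property id).
bookings = [
    ("2017-01", "t1", "p1"),
    ("2017-01", "t2", "p1"),
    ("2017-02", "t1", "p2"),
    ("2017-03", "t3", "p2"),
]

def monthly_metrics(bookings, months):
    """Return one row of illustrative marketplace metrics per selected month."""
    by_month = defaultdict(list)
    for month, traveller, prop in bookings:
        by_month[month].append((traveller, prop))

    rows = []
    for month in months:  # only the months the user selected
        records = by_month.get(month, [])
        rows.append({
            "month": month,
            "bookings": len(records),
            "active_buyers": len({t for t, _ in records}),   # distinct travellers
            "active_sellers": len({p for _, p in records}),  # distinct properties
        })
    return rows

selected = ["2017-01", "2017-02"]
metrics = monthly_metrics(bookings, selected)
```

Each row in `metrics` corresponds to one column or row of the spreadsheet, so changing `selected` is all that is needed to refresh the output for a different set of months.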

Modelling Booking

One of the most important questions at Accomable is “what drives the number of bookings?” More bookings means more customers finding what they need on the site, and ultimately drives profit.

I was interested in answering this question from both the travellers’ and hosts’ perspectives, as the decision to book will depend on a combination of factors. Some of that data we didn’t have access to, such as the travellers’ specific circumstances at the time: whether they’d just got a windfall, say, or just really needed a break. But we had data about the travellers’ interaction with the site, which is what is within Accomable’s locus of control anyway. The decision to book a particular property of course also brings in the characteristics of that particular property.

I had access to data on users, properties and bookings which I merged together. I then started engineering features, for example, on the travellers’ side:

- I extracted whether the prospective traveller had provided their email address and / or phone number (which indicates a certain legitimacy of the booking enquiry)

- I extracted the length of their message (Airbnb “designs for trust” by suggesting the length of messages through the box size: too short a message may not show enough effort but too long a message may scare off a host!)
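The traveller-side features above can be sketched as follows. This is a Python illustration rather than the Stata used in the project, and the field names (`email`, `phone`, `message`) are hypothetical stand-ins for whatever the enquiry data actually contained.

```python
def traveller_features(enquiry):
    """Build illustrative traveller-side features from one enquiry record (a dict)."""
    # Treat missing values and whitespace-only strings as "not provided".
    email = (enquiry.get("email") or "").strip()
    phone = (enquiry.get("phone") or "").strip()
    message = (enquiry.get("message") or "").strip()
    return {
        "has_email": int(bool(email)),
        "has_phone": int(bool(phone)),
        "message_length": len(message),  # length in characters
    }

example = {
    "email": "a@example.com",
    "phone": None,
    "message": "Hello, is the bathroom step-free?",
}
features = traveller_features(example)
```

Encoding the contact details as 0/1 indicators keeps them directly usable as regressors alongside the numeric message length.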

The characteristics of the property, such as having a step-free bathroom or a pool, were already well-delineated into features so there wasn’t much more to add. Such characteristics are particularly interesting in this case given that disabled people often find it difficult to find goods and services that meet their accessibility needs. I did add, however, a feature which indicated whether the property was popular. Accomable doesn’t communicate to travellers which are the most popular properties, so I used this feature as a proxy for underlying quality which isn’t observed in our dataset (and including it makes it more likely that we get unbiased, consistent estimates for the other variables).
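One way such a popularity flag could be built is sketched below in Python. It assumes “popular” means having the most bookings in a given month, and it flags every property that ties for first place; the exact definition and the tie-breaking rule used in the project aren't stated, so treat both as assumptions.

```python
from collections import Counter

# Hypothetical (month, property id) pairs, one per booking.
bookings = [
    ("2017-01", "p1"),
    ("2017-01", "p1"),
    ("2017-01", "p2"),
    ("2017-02", "p2"),
]

def popular_flags(bookings):
    """Map (month, property) -> 1 if the property had the most bookings that month."""
    counts = Counter(bookings)
    best = {}  # highest booking count seen in each month
    for (month, _), n in counts.items():
        best[month] = max(best.get(month, 0), n)
    return {key: int(n == best[key[0]]) for key, n in counts.items()}

flags = popular_flags(bookings)
```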

I also extracted some features of the booking itself, for example:

- The time between the booking enquiry and the check-in date (with the rationale being to understand whether last minute or pipe dream travel plans contributed more to the number of bookings).

- The time of day that the traveller sent the booking enquiry (with the rationale being to understand the booking behaviour of the traveller e.g. are they getting bored at work in the mid-afternoon and booking a holiday?)
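Both booking features reduce to simple date arithmetic. The Python sketch below is illustrative only: the function and field names are hypothetical, and measuring the lead time in whole days and the time of day as an hour from 0 to 23 are assumptions about granularity.

```python
from datetime import datetime

def booking_features(enquiry_sent, check_in):
    """Derive lead time (whole days) and hour of day from two datetimes."""
    lead = check_in.date() - enquiry_sent.date()
    return {
        "lead_time_days": lead.days,
        "enquiry_hour": enquiry_sent.hour,  # 0-23, in the timestamp's own timezone
    }

features = booking_features(
    enquiry_sent=datetime(2017, 3, 1, 15, 30),
    check_in=datetime(2017, 3, 15),
)
```

Comparing dates rather than full timestamps avoids the lead time changing depending on what time of day the enquiry was sent.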

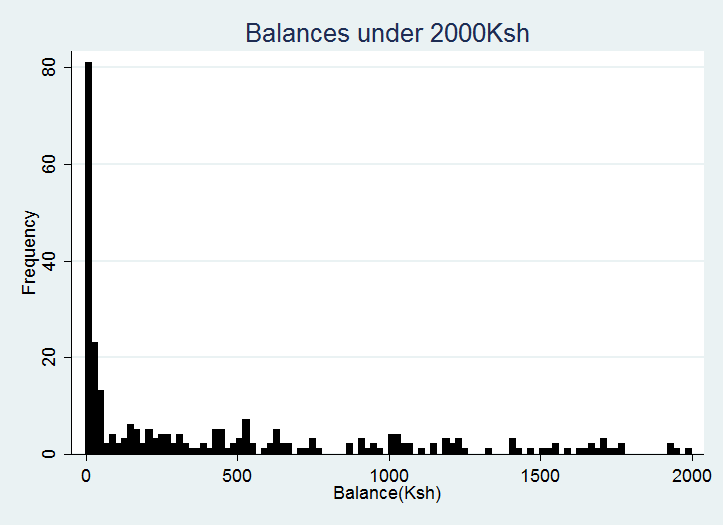

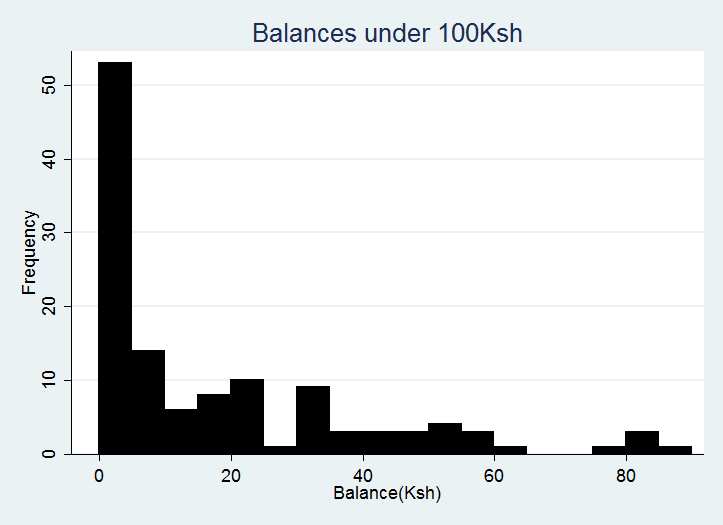

I then tried out a number of OLS regression models for the following dependent variables: the number of orders per traveller, the number of bookings per property and the price. The adjusted R-squared values ranged from 0.15 to 0.44, with the price models being the least stable (in that the coefficients varied greatly when additional variables were included).
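To make the mechanics concrete, here is a minimal Python sketch of OLS with a single 0/1 regressor, together with the adjusted R-squared calculation. The models in the project had many regressors and were fitted in Stata, so this is a simplification, and the data below is made up purely for illustration.

```python
def simple_ols(x, y):
    """Fit y = a + b*x by ordinary least squares; return (a, b, adjusted R-squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx            # slope
    a = mean_y - b * mean_x  # intercept

    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r2 = 1 - ss_res / ss_tot
    k = 1  # number of regressors, excluding the intercept
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)
    return a, b, adj_r2

# Made-up toy data: 1 = property has an electric bed, 0 = it does not.
electric_bed = [0, 0, 0, 1, 1, 1]
bookings = [1, 2, 3, 3, 4, 5]
intercept, slope, adj_r2 = simple_ols(electric_bed, bookings)
```

With a single 0/1 regressor, the slope equals the difference in mean bookings between the two groups. In a model with several regressors, the coefficient on such an indicator is the same kind of difference, but holding the other variables fixed.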

Findings

- The number of bookings per traveller is higher if the traveller had booked a popular property (most popular that month) in the past, and also if they provided their email and their phone number (likely to be indications of seriousness of interest)

- The number of bookings a property receives is much higher when it’s a swap (this could be because it may be easier to trust that the property is suitable if the traveller is swapping homes with a host they know to have similar accessibility requirements), and when there’s an electric bed and a ceiling hoist (this suggests a scarcity in supply of such properties relative to demand). Properties with an electric bed have, on average, 2.2 more bookings than properties without an electric bed.

- Bookings were most frequent at 12 noon and at 7pm, suggesting lunchtime and evening browses!

Recommendations

I recommended that Accomable focus on recruiting properties which allowed a home swap, and also those which catered for travellers requiring the more intensive accessibility features such as electric beds and ceiling hoists, which other websites are unlikely to be able to cater for. I also recommended concentrating marketing emails around the popular booking times.